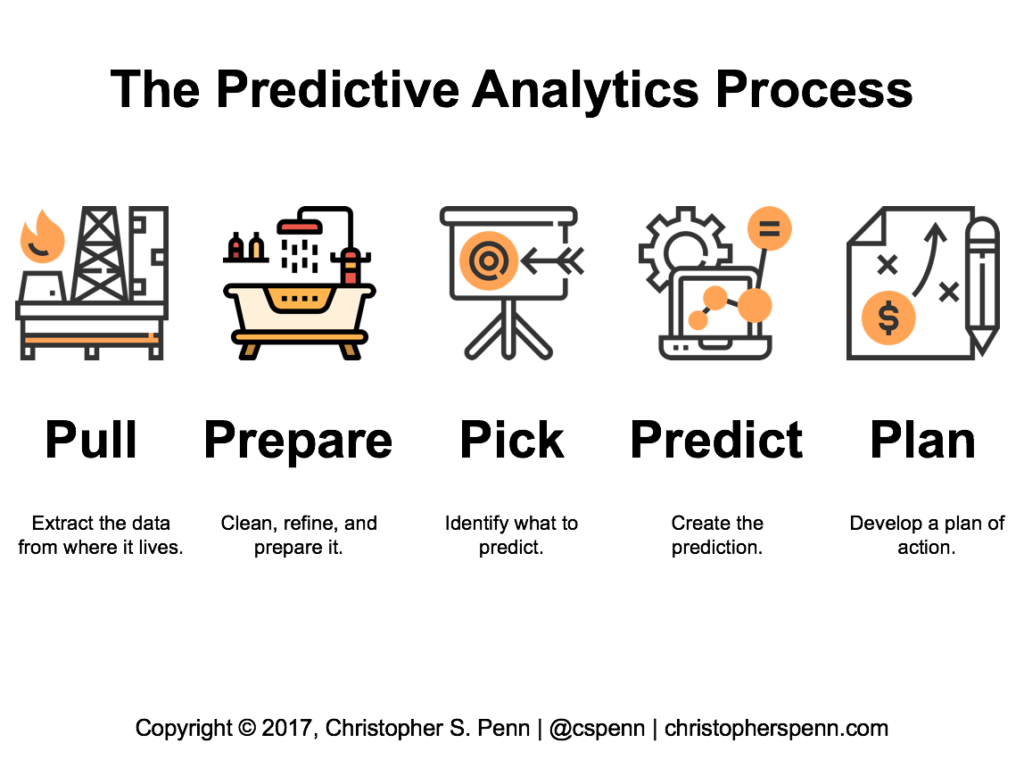

In the last post, we looked at the consequences of having poor process in our predictive analytics practice. Let’s look at the first step of that process now.

Pull

If data is the new oil, pulling data is analogous to drilling and extracting oil from the ground. We need to identify what data sources we have available to us, understand what condition the data is in and whether it’s suitable for predictive analytics, then move it to processing.

We have two categories of data we access for prediction: public and private.

Public Datasets

Public datasets are important for providing additional context to our data. With public data, we enrich our data with external knowledge that can help explain what we see in our data.

For example, suppose we’re a coffee shop. We want to predict the number of walk-in customers likely in the next year. It would be helpful to inform our training data – the historical data we build and train our software on – what the weather was like, or what the economy was like at any given point in the past.

The list of public data sources is gigantic, but some of my favorites for prediction include:

- Google Trends: a useful source for search data

- Data.gov: the US government public repository with connections to hundreds of thousands of datasets

- FRED: The St. Louis Federal Reserve Bank with thousands of time-series datasets on the economy

- Kaggle: A terrific portal for datasets to learn from

- Google BigQuery: Google stores massive datasets such as news, books, etc. and makes them available to the public

- National Weather Service: Meteorological data is available for the entire planet; for the United States, data is available as far back as the late 1700s

Hundreds more datasets are available and curated by data scientists around the Internet.

Private Datasets

Our private datasets are internal data from all our business systems. These private datasets are often what we want to predict. The biggest challenge with most private data is access; as organizations grow larger, access to data becomes siloed within the organization. Only executive sponsorship can keep access to data open, so be sure that’s part of the predictive analytics process.

The second biggest challenge for many organizations’ private data is data quality. Cleaning up the data or improving its collection is a vital part of preparation for prediction.

Some of the internal data we most often want to predict includes:

- Revenues

- Cash flow

- Expenses

- Customer service inquiries

- Website traffic

- Lead generation

- Staffing levels

- Email marketing performance

- Ad performance

- Demand generation

How to Pull Datasets

We will most likely need the help of a developer with API experience and a database architect to successfully pull data. The developer will connect to data sources, public and private, and write code that will extract the data on a regular basis for us.

That data has to go somewhere, so our database architect will help contain the data in a usable format. While many data scientists and machine learning experts love massive database storage systems like Hadoop and Apache Spark, we can make accurate and robust predictions from nearly any database as long as it’s clean and high-performance.

Next: Prepare

If data is the new oil, we’ve now got a supply of crude oil. However, crude oil is useless without refining. In the next post, we’ll look at data preparation. Stay tuned.

You might also enjoy:

- You Ask, I Answer: Retrieval Augmented Generation vs Fine-Tuning?

- Almost Timely News, January 14, 2024: The Future of Generative AI is Open

- You Ask, I Answer: AI Music Collaborations and Copyright?

- Mind Readings: Hacking Social Media Algorithms

- Mind Readings: Most Analytics Data is Wasted

Want to read more like this from Christopher Penn? Get updates here:

Take my Generative AI for Marketers course! |

Leave a Reply